A few weeks back I posted on why our mailboxes are full of spam. I mentioned there that I had written a small, very simple Perl script to crawl the internet and fish for plain-text emails. It was a pretty easy task, since Perl was designed to make text manipulation easy.

The procedure I used was as follows:

1. Set up a MySQL database for storing the links to visit and the emails found.

2. Connect to the database.

3. Select a link to visit.

4. Make sure it’s not an image / pdf / google page / amazon page. If it is, jump back to step 3.

5. Download the page.

6. Pattern match for emails.

7. Get the links within the page.

8. Insert the emails and the new links into the database.

9. Go back to step 3.
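The steps above can be sketched without a database or a network connection. The version below is only an illustration: the `%fake_web` hash stands in for the internet, an in-memory queue stands in for the MySQL table of links, and the `crawl` function name is made up for this example.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical stand-in for the web: URL => page body.
my %fake_web = (
    'http://blog-a.test/' =>
        'Contact: alice@example.com <a href="http://blog-b.test/">b</a> '
      . '<a href="http://blog-a.test/logo.jpg">logo</a>',
    'http://blog-b.test/' =>
        'Mail bob@example.org or go <a href="http://blog-a.test/">back</a>',
);

# crawl($start_url, \%pages) returns the sorted list of emails found.
sub crawl {
    my ($start, $pages) = @_;
    my @queue = ($start);         # links still to visit (the "urls" table)
    my %seen  = ($start => 1);    # links already queued once
    my %emails;                   # the "emails" table

    # Select the next link until none are left.
    while (defined(my $url = shift @queue)) {
        # Skip binary content such as images and pdfs.
        next if $url =~ /\.(?:jpg|gif|pdf)$/i;

        # "Download" the page; a missing entry plays the role of a 404.
        my $content = $pages->{$url};
        next unless defined $content;

        # Pattern match for emails.
        while ($content =~ /\b([A-Z0-9._%-]+\@[A-Z0-9.-]+\.[A-Z]{2,4})\b/gi) {
            $emails{ lc $1 } = 1;
        }

        # Collect the links and queue the ones not seen before.
        while ($content =~ /href="([^"]+)"/gi) {
            push @queue, $1 unless $seen{$1}++;
        }
    }

    my @found = sort keys %emails;
    return @found;
}

print join("\n", crawl('http://blog-a.test/', \%fake_web)), "\n";
```

The real script replaces the queue with the database and the hash lookup with an HTTP request, but the shape of the loop stays the same.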

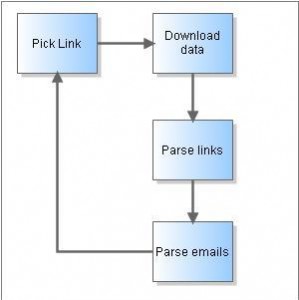

This is roughly the idea. The small flowchart above shows the general flow.

I would like to demonstrate how it’s done with Perl. In order to run it, I’d suggest a machine with Perl installed (preferably Linux). If you don’t have Linux, you will probably have to download a Perl binary for Windows. Check out this page for further info.

Now, let’s cut to the chase. Here are the first lines of the script:

#!/usr/bin/perl

use WWW::Mechanize;

use Data::Dumper;

use DBI;

Here we just include a few libraries. To be more specific, we are going to use the Mechanize library to retrieve the pages easily and DBI to connect to MySQL. Data::Dumper is not used in the snippets shown here; it is only handy for debugging.

my $host = "127.0.0.1";

my $database = "db";

my $username = "username";

my $password = "password";

my $connectionInfo = "dbi:mysql:database=$database;host=$host";

my $connection = DBI->connect($connectionInfo, $username, $password) or die "Cannot connect: $DBI::errstr";

With this snippet we connect to the database. Next we send all the error messages to “/dev/null”. I did this because I kept getting noisy errors for 404’s and the like which, once the script was done, I didn’t care about. If you want to play with it, just leave this line out to get all the errors printed on your screen.

open(STDERR, '>', '/dev/null') or die "Cannot redirect STDERR: $!";

And from here on we get to the serious stuff. We start a loop over all the links we need to visit. In practice this loop is endless, since the table of links keeps being populated with new ones. Here is the start:

while (defined(my $url = get_url($connection))) {

I will take a small break here to introduce the “get_url” function. With it I retrieve the next link to visit from the database. Here it is:

sub get_url {

my($connection) = @_;

my $stm = $connection->prepare("SELECT url FROM urls WHERE visited = '0' LIMIT 1;");

$stm->execute();

my @results=$stm->fetchrow_array();

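# Nothing left to visit.

return unless @results;

# Mark the link as visited so it is not picked up again.

$connection->do("UPDATE urls SET visited = '1' WHERE url = ?", undef, $results[0]);

return $results[0];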

}

This way I get the next link and mark it as visited. Back to the while loop: we have the link we want to visit, but before we go on we need to make sure of the following:

- It’s not some kind of image. If it is, there is no point in getting the contents, since I am surely not going to find any email in them. It’s all binary.

- It’s not a pdf. Same as above, this is binary, so I won’t get any email from that either.

- It’s not an amazon page. Since so many pages carry amazon ads, I might get links to amazon products or widget landing pages. There is no point at all in getting those pages.

- It’s not a google page. Same as above: due to the Google ads, many links in blogs lead to google pages that are of no use to this crawler. So, we will ignore those too.

In order to ignore a link, here is what we need to do. Let’s take the first example and ignore a jpg file.

if($url =~ /\.jpg$/){

next;

}
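A sketch of how all of these checks could be folded into one helper, so the loop only needs `next if should_skip($url);`. The function name and the two lists are assumptions made for this example, not part of the original script. Note that it also looks only at the host name for google / amazon, which is slightly stricter than matching the word anywhere in the link.

```perl
use strict;
use warnings;

# Hypothetical lists; extend them to taste.
my @skip_ext   = qw(jpg jpeg gif bmp png pdf mp3 zip rar msi exe);
my @skip_hosts = qw(google amazon);

my $ext_re  = join '|', map { quotemeta($_) } @skip_ext;
my $host_re = join '|', map { quotemeta($_) } @skip_hosts;

# Returns 1 when the crawler should not bother downloading $url.
sub should_skip {
    my ($url) = @_;

    # Mail links are not pages.
    return 1 if $url =~ /^mailto:/i;

    # Check the extension on the path only, so ".jpg?size=big" still counts.
    (my $path = $url) =~ s/[?#].*//s;
    return 1 if $path =~ /\.(?:$ext_re)$/i;

    # Check the host part of an http(s) link against the ad-heavy sites.
    my ($host) = $url =~ m{^https?://([^/:?#]+)}i;
    return 1 if defined $host && $host =~ /(?:^|\.)(?:$host_re)\./i;

    return 0;
}

for my $u ('http://example.com/a.JPG?size=big',
           'http://www.google.com/ads',
           'http://example.com/post') {
    printf "%-36s %s\n", $u, should_skip($u) ? 'skip' : 'visit';
}
```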

The same pattern takes care of all the cases above. I won’t go into the details of each one; there is no point in doing so.

Now we are sure we want to visit the link. So, we are going to use the Mechanize library to retrieve the link’s content. Here is how to do it:

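# autocheck => 0 keeps get() from dying on HTTP errors such as 404.

my $mech = WWW::Mechanize->new( autocheck => 0 );

$mech->timeout(30);

$mech->agent_alias( 'Windows IE 6' );

$mech->get($url);

# Move on to the next link if the download failed.

next unless $mech->success;

my $content = $mech->content();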

Using this simple method we download the page the link points to. Notice that we are telling the server that we are a “Windows IE 6” browser. That’s nasty, but it makes the request look more legitimate. We now have the HTML of the page in the “$content” variable. Let’s parse it for emails:

while($content =~ /\b([A-Z0-9._%-]+@[A-Z0-9.-]+\.([A-Z]{2,4}))\b/gi){

my ($email, $tld) = ($1, $2);

if(lc($tld) ne "jpg"){

# Placeholders let DBI take care of the quoting.

$connection->do("INSERT INTO emails(email, site_seen) VALUES(?, ?)", undef, $email, $url);

}

}

With this snippet we search the page for emails using a regular expression. It only catches plain, simple addresses like “foo@bar.com”. It’s not sophisticated, but it works surprisingly well! Do notice one thing: we exclude the matches that end in “jpg”. I do this because I noticed that many image file names on Flickr look like an email. This saved me from a lot of 404’s indeed!
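The matching step can be tried on its own, without a database. The sketch below uses the same pattern as the snippet above; the `extract_emails` name, the lowercasing and the duplicate check are additions made for this example.

```perl
use strict;
use warnings;

# Returns the unique, lowercased addresses found in $text, in order of appearance.
sub extract_emails {
    my ($text) = @_;
    my (%seen, @emails);

    while ($text =~ /\b([A-Z0-9._%-]+\@[A-Z0-9.-]+\.([A-Z]{2,4}))\b/gi) {
        my ($email, $tld) = (lc($1), lc($2));

        # File names such as photo_123@n00.jpg are not addresses.
        next if $tld eq 'jpg';

        push @emails, $email unless $seen{$email}++;
    }
    return @emails;
}

my $page = 'Write to Foo@Bar.com or foo@bar.com, see photo_123@n00.jpg '
         . 'and sales@shop.example.org.';
print "$_\n" for extract_emails($page);
# prints:
# foo@bar.com
# sales@shop.example.org
```

Because the pattern only allows two to four letters after the last dot, addresses on longer top-level domains are missed. That is the price of keeping the expression this simple.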

Now, onto the links that are contained in this page. We need to extract them first. Check this out:

my @links = $mech->links();

With this simple line we have in the array “links” all the links contained within the page. Isn’t Mechanize awesome? Each element is a link object rather than a plain string, so we ask it for its absolute URL before matching. Next we loop through the links and add those that are not images, amazon etc. to the database in order to visit them later.

foreach my $link (@links){

my $href = $link->url_abs()->as_string;

# Skip mail links and the ad-heavy sites.

next if $href =~ /mailto|google|amazon\.com/;

# Skip binary files.

next if $href =~ /\.(?:mp3|bmp|gif|jpg|zip|rar|msi|exe)$/i;

$connection->do("INSERT INTO urls(url) VALUES(?)", undef, $href);

}

We are done! Now, the script is going to select the next link to visit. As simple as that!

From the above code you can clearly see how easy it is to write a simple crawler. When I put it into action, the urls table contained only one link: that of a blog listing site. In a couple of hours it contained thousands of links, most of which were valid. This is essentially how a crawler works. It starts from somewhere and expands its “web” to neighbouring sites, then to the neighbours of the neighbours, and so on.

One more thing I’d like to point out is that this crawler is not sophisticated. It doesn’t check for pages with the same content, or for links that look very much alike and might point to the same content. For instance, if a site lets you change the background color through a GET parameter on the link, that is something we would like to dodge. The links could be “http://www.mysite.com/” and “http://www.mysite.com/?color=blue”. The content behind the two seemingly different links is the same.

All in all it was a simplistic crawler, but the principles of crawling are there. If you have any questions / suggestions or find any error, I’d be glad to hear from you!
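One way to dodge such look-alike links is to bring every URL to a canonical form before storing it. The sketch below is only a starting point under a few assumptions: the `canonical_url` name and the list of ignorable parameters are invented for this example, the order of query parameters is assumed not to matter, and links carrying a user name before the host are not handled.

```perl
use strict;
use warnings;

# Hypothetical list of query parameters that only change the presentation.
my %ignored_param = map { $_ => 1 } qw(color theme);

sub canonical_url {
    my ($url) = @_;

    # The fragment never reaches the server, so drop it.
    $url =~ s/#.*//s;
    return '' unless length $url;

    my ($base, $query) = split /\?/, $url, 2;
    $query = '' unless defined $query;

    # Scheme and host are case-insensitive; the path is left alone.
    $base =~ s{^(https?://[^/]+)}{\L$1}i;

    # "http://host" and "http://host/" name the same page.
    $base .= '/' if $base =~ m{^https?://[^/]+$};

    # Keep only the parameters that can change the content.
    my @kept;
    for my $pair (split /[&;]/, $query) {
        next unless length $pair;
        my ($name) = split /=/, $pair, 2;
        push @kept, $pair unless $ignored_param{$name};
    }

    # Sort so that ?a=1&b=2 and ?b=2&a=1 collapse into one entry.
    return @kept ? $base . '?' . join('&', sort @kept) : $base;
}

print canonical_url('http://www.mysite.com/?color=blue'), "\n";
# prints: http://www.mysite.com/
```

Storing the canonical form in the urls table, together with a unique index on that column, would keep the crawler from visiting the same page twice under different names.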

Well explained buddy. Though i have never programmed in Perl, i got a good idea of how it is done.

Don’t forget to use “use strict;”.

And it is best to imitate something other than IE6 – website owners should not think that someone uses IE6.

@Madhur: Hope you find it useful if you decide to take on Perl. But, believe me, especially when dealing with trivial text operations, Perl is your way out 😉

@Alexandr: You are right about the “strict” one. As for the IE6 imitation, what i thought was that in some cases website owners take special care for this browser resulting to send less data in most cases which is good for my crawler 😉 But i guess you are right. Maybe lynx would be better 🙂

Thanks for dropping by guys!

let say instead of email addresses we search the source code for the comment section and then ‘rel=follow’. Would that work in theory with some jerry rigging?

@Donace: It can actually work with anything. The only thing we would need to change is the regular expression, (the one that has =~ ) other than that we can extract any kind of info…

Great… Now i know why i get lot of spam.

I took some part of bot code from donace and improved the bot to drop entrecards automatically everyday 🙂

Getting perl on windows takes more time than installing linux and learning to use it. Seriously.

@Dzieci: I agree with u…

@stratosg: thanks 4 xplainin it so beautifully…. 🙂

@Abishek: Thanks for stopping by!

@stratosg: thanks a lot…I’ve been looking for some simple/sample web spider to get started in Perl/Python…after spending some time what I found is that Perl is best suited for this kinda of job b’coz of the availability of almost anything though CPAN, which you might not get off-the-shelf in case of python. Now I’m clear its THE Perl thats best suited for writing a custom web spider. Your code is really nice and simple. Thanks a lot.

Maybe you can modify this for a SQLite Database and a Download with the complete script?

Hi,

i’m writing a crawler in perl but i get http errors like 404 and this breaks my $mech->get() loop. Did you find a way to avoid http errors to exit your script ?

regards,

peer

peer: If your program dies on http error, try Try::Tiny module to catch exceptions.